The Illusion of Innocence: Unveiling the Dangers of AI Kids’ Videos

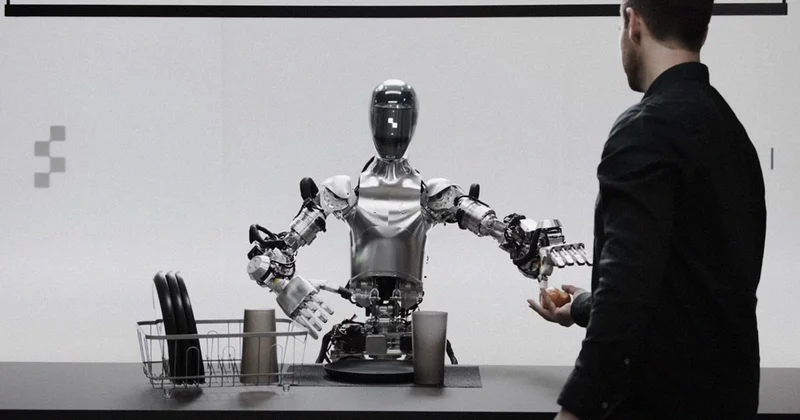

In the vast digital landscape, a concerning phenomenon is quietly taking shape: a surge of AI-generated content aimed at captivating young, impressionable minds. Recent reporting from Wired sheds light on a disquieting trend in which AI scammers exploit generative tools to churn out a deluge of nonsensical and often bizarre videos targeting children under the guise of innocent entertainment. These AI-spun creations, which mimic the style of popular shows like Cocomelon, are infiltrating platforms like YouTube Kids, where they amass views and subscribers without being identified as synthetic.

The urgency of addressing this incursion is hard to overstate. The implications of toddlers and young children consuming large amounts of AI-generated content, devoid of human curation and educational oversight, are profound and alarming. Early childhood, a pivotal developmental phase for cognitive faculties and emotional intelligence, is now under siege by content engineered not for genuine enrichment but for profit. With algorithms rather than people deciding what reaches young viewers, the risks to children’s cognitive development and socialization are considerable.

Therefore, it is imperative that immediate attention and stringent regulations be put in place to combat the proliferation of AI-generated videos designed for children. The exponential growth of this synthetic content threatens to erode the very fabric of childhood innocence and genuine educational experiences, ushering in a dystopian era where young minds are unwittingly subjected to a digital deluge of mind-numbing and potentially harmful stimuli. The onus falls on platforms, regulatory bodies, and society as a whole to safeguard the cognitive well-being of our future generations by countering this perilous trend with vigilance and proactive measures.

Unmasking the Algorithmic Puppeteers: Tools Behind AI-Generated Kids’ Content

AI scammers are using a range of generative tools to churn out nonsensical and often bizarre YouTube kids’ videos that reach the screens of young, unsuspecting viewers. Among the tools are OpenAI’s ChatGPT for scripting, ElevenLabs’ voice-generating AI for audio, and Adobe Express’s AI suite, among others. Together, these tools are used to mass-produce videos ranging from short clips to lengthy episodes, all designed to capture children’s attention. The ease with which these programs can generate content in the style of popular kids’ shows like Cocomelon is both impressive and alarming.

The style and content of these AI-generated videos closely resemble those of beloved children’s programs like Cocomelon, which makes them all the more enticing to young viewers. The colorful animations, catchy tunes, and simple storylines mimic the format of popular shows, producing a blend that can easily deceive both children and parents. What makes these videos especially hard to spot is the absence of any clear marker of their synthetic origin. They typically carry no label and few obvious tell-tale signs that would alert viewers to the fact that they were generated by software rather than made by people.

Despite their synthetic nature, these AI-generated kids’ videos have managed to amass a staggering number of views and subscribers on platforms like YouTube. The lack of discernible markers designating them as AI-generated has allowed them to infiltrate the screens of young children unnoticed. Parents, unaware of the origins of these videos, may inadvertently expose their kids to content that has been churned out by algorithms rather than crafted with educational or developmental intent. The sheer popularity and viewership of these AI-generated videos targeting children underscore the need for heightened awareness and vigilance when it comes to digital content consumption among the youngest members of society.

Beyond Innocence: Decoding the Cocomelon Connection in AI Kids’ Videos

The makers of AI-generated children’s content do not appear to consult childhood development experts, which raises significant concerns about the potential negative impact on children’s cognitive development. Without input from professionals who understand the nuances of childhood learning and psychology, these videos may not be appropriately tailored to support healthy cognitive growth. Experts warn that exposure to poorly designed content could hinder rather than enhance children’s development, leading to a host of issues as they mature.

Moreover, the implications of prolonged exposure to AI-generated content on young minds are a point of contention among experts in the field. Some argue that the constant consumption of such content, which may lack educational value or proper developmental scaffolding, could have lasting effects on children’s cognitive abilities and behavior. As the Wired report highlights, the mass production of AI-spun videos without expert oversight is a troubling trend that could shape a generation of children in ways that are not yet fully understood.

Tufts University neuroscientist Erik Hoel echoes these concerns, describing as dystopian the prospect of toddlers being exposed to “synthetic runoff” with no consideration for their cognitive well-being. His perspective underscores the urgency of addressing the unregulated dissemination of AI-generated content targeted at impressionable young children. Hoel’s insights serve as a stark reminder of the responsibility that content creators, platforms, and regulators bear in safeguarding the developmental experiences of the youngest members of society.

In light of these criticisms and expert opinions, it is clear that the unchecked proliferation of AI-generated children’s content poses a significant threat to the cognitive development and well-being of young audiences. As discussions around the ethical and developmental implications of such content continue to evolve, it is essential to prioritize the involvement of childhood development experts to ensure that children’s media consumption aligns with their developmental needs and best interests.

Lost in the Digital Maze: The Absence of Clues in AI Kids’ Content

YouTube, faced with the alarming rise of AI-generated content targeting unsuspecting children, has outlined its strategy to combat this insidious trend. The tech giant’s primary approach, as communicated to Wired, involves placing the onus on creators to self-disclose when they have produced altered or synthetic content that appears genuine. By requiring transparency from content creators, YouTube aims to address the proliferation of AI-spun videos that masquerade as authentic children’s entertainment.

However, YouTube’s reliance on creators’ voluntary disclosure poses significant challenges in practice. Much of the appeal of these videos for their makers lies in passing as human-made, so creators have little incentive to admit to using such technology. This reluctance to disclose the use of AI tools undermines YouTube’s efforts to stem the flow of misleading and potentially harmful content directed at young audiences.

In addition to self-disclosure, YouTube employs a combination of automated filters, human review, and user feedback to moderate content on its dedicated platform for kids, YouTube Kids. While these measures are intended to safeguard children from inappropriate material, the recent influx of AI-generated videos slipping through the moderation process highlights the limitations of this system. The sheer volume of content uploaded daily, coupled with the intricacies of detecting AI-generated videos, underscores the challenges YouTube faces in effectively policing its platforms.

Critics, including Tracy Pizzo Frey from Common Sense Media, emphasize the critical importance of meaningful human oversight, particularly when dealing with generative AI content. The current reliance on self-reporting by creators and automated moderation mechanisms falls short in ensuring the safety and quality of children’s content on YouTube. As concerns mount over the impact of AI-generated videos on young viewers, the efficacy of YouTube’s moderation efforts comes under scrutiny, raising pressing questions about the platform’s responsibility in protecting its most vulnerable users.

Screen Time Sorcery: The Allure and Alarming Reach of AI Kids’ Videos

In the wake of the revelations about AI-generated content targeting children, the need for stringent regulation and oversight of generative AI content has become clear. The absence of human discernment in the creation and dissemination of these videos underscores the importance of meaningful human oversight. As toddlers across the nation unwittingly take in synthetic runoff masquerading as educational content, the need for human reviewers to sift through and filter out harmful material is more urgent than ever. Without such oversight, AI scammers will continue to exploit the digital landscape for profit, regardless of the potential implications for young, impressionable minds.

Media literacy organizations find themselves at the vanguard of advocating for children’s online safety in the face of this insidious onslaught of AI-generated videos. These groups play a crucial role in raising awareness about the deceptive nature of such content and educating parents on how to navigate the digital realm with caution. By shedding light on the dangers posed by unregulated AI content, these organizations empower caregivers to make informed decisions regarding their children’s media consumption habits, thereby mitigating the risks associated with unchecked exposure to inappropriate material.

The escalating concerns surrounding AI-generated videos targeting children have sparked widespread calls for regulatory intervention to shield young viewers from the deleterious effects of such content. As the boundaries of digital content creation continue to expand, it has become imperative for regulatory bodies to step in and establish guidelines that safeguard children from being exploited by unscrupulous AI scammers. Striking a balance between technological innovation and ethical considerations is paramount in ensuring that AI content creation remains a force for good, rather than a vehicle for manipulation and harm.

In navigating the complex landscape of AI content creation, it is essential to weigh the potential benefits of innovation against the ethical considerations that underpin the protection of vulnerable audiences, especially children. As the digital realm evolves at a rapid pace, it is incumbent upon creators, platforms, and regulatory authorities to collaborate in upholding ethical standards that prioritize the well-being and safety of young viewers. By fostering a culture of responsible content creation and consumption, we can harness the power of AI for positive impact while safeguarding the minds and futures of the next generation.

The Silent Experts: Unheard Voices in AI Kids’ Content Creation

Taken together, these findings show that AI-generated content targeting children carries significant risks that cannot be ignored. These videos, produced without human insight or educational expertise, pose a genuine threat to the developing minds of young viewers. Their deceptive nature, mimicking the style of popular children’s content like Cocomelon, not only lures in unsuspecting parents but also exposes children to potentially harmful content that lacks proper oversight.

The need for stricter regulation and oversight of children’s online content has never been more pressing. As AI scammers continue to exploit the digital landscape for financial gain at the expense of impressionable young minds, it becomes imperative for platforms like YouTube to take proactive steps in monitoring and flagging AI-generated content. Simply relying on creators to self-disclose such content is insufficient, given the ease with which these videos can evade detection and reach millions of viewers.

Safeguarding children’s online experiences and cognitive development must be prioritized by both tech companies and regulatory bodies. The responsibility of ensuring a safe and enriching digital environment for children cannot rest solely on the shoulders of families. Collaborative efforts between industry stakeholders, child development experts, and policymakers are essential to combat the proliferation of harmful AI-generated content and uphold standards that prioritize children’s well-being over profit margins. By working together to enforce stringent regulations and promote media literacy, we can strive to protect the innocence and cognitive development of the next generation in an increasingly digital world.