Intriguing Insights into the Emo Robot’s Smile Mimicry

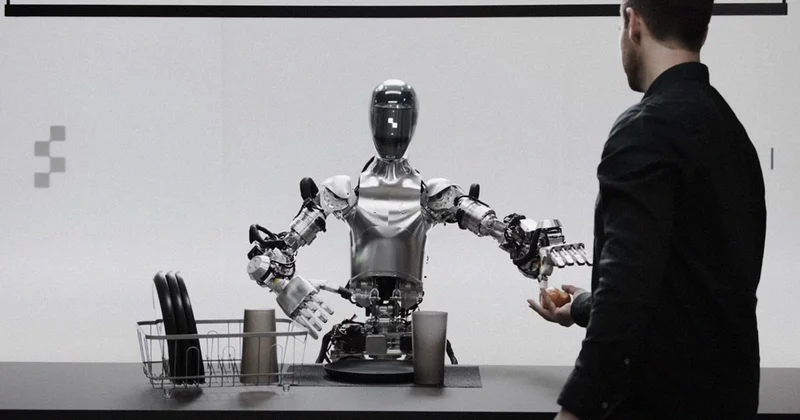

Imagine a world where robots not only respond to your smiles but also initiate them with uncanny precision. Enter Emo, the latest creation that blurs the lines between artificial intelligence and human emotions. Researchers have unveiled a groundbreaking robot capable of anticipating and mimicking human smiles in near real-time. This innovative technology, showcased in a study published in Science Robotics, marks a significant step towards natural human-robot interactions, a crucial element for the widespread acceptance of AI-powered robots.

In the realm of robotics, achieving authentic interactions between humans and machines has long been a challenge. The concept of the uncanny valley looms large, describing the unsettling feeling individuals experience when robots resemble humans but fall short in their expressions or movements. One key factor contributing to this discomfort is the lack of synchronized facial expressions in human-robot interactions. Emo’s ability to predict and mirror smiles almost instantaneously addresses this issue, bridging the gap between robotic imitation and genuine emotional connection.

As we navigate a future where humanoid robots may become integral parts of our daily lives, the development of Emo symbolizes a pivotal moment in the evolution of AI technology. By mastering facial coexpression, in which a robot mirrors a person’s expression in real time, Emo sets a new standard for the authenticity of human-robot interactions. This advancement not only showcases the progress in robotics but also hints at a future where robots may one day interpret and respond to our emotions as intuitively as another human would.

Unlocking the Power of Natural Human-Robot Interactions

With the rapid advancement of technology, AI-powered robots have begun to integrate into various aspects of daily life, from customer service to healthcare and even our own homes. These robots are designed to assist us, communicate with us, and sometimes even entertain us. However, one of the biggest challenges faced by researchers and developers is making these humanoid robots appear and behave more like humans.

The concept of the uncanny valley plays a significant role in this challenge. Coined by Japanese roboticist Masahiro Mori in 1970, the uncanny valley refers to the feeling of unease or discomfort people experience when robots or other non-human entities closely resemble but fall short of looking completely human. This phenomenon arises when robots exhibit human-like features but with subtle imperfections, making them appear eerie or unsettling to interact with.

This psychological barrier presented by the uncanny valley poses a major hurdle in creating robots that can seamlessly integrate into society. In the realm of human-robot interactions, this gap in authenticity can hinder trust, communication, and acceptance of AI-powered systems. It is crucial for robots not only to perform tasks efficiently but also to evoke positive emotions and comfort in humans to foster successful interactions.

Efforts to bridge the uncanny valley involve enhancing the naturalness of facial expressions, gestures, and overall behavior of robots to align more closely with human expectations. By understanding how humans perceive and respond to emotional cues, such as facial expressions and body language, researchers aim to create robots that can engage with us in a more intuitive and empathetic manner. The recent development of Emo, the robot capable of anticipating and mimicking human smiles in near real time, exemplifies a step forward in achieving natural human-robot interactions and overcoming the uncanny valley effect. As we continue to innovate in the field of robotics and artificial intelligence, the goal remains clear: to create robots that not only fulfill functional roles but also connect with us on a deeper, more human level.

Navigating the Uncanny Valley: A Path to Seamless Human-Robot Connections

Emo, the robot designed to anticipate and mimic human smiles, operates with impressive speed and accuracy, predicting a smile less than a second before it appears on a person’s face. This foresight is made possible by cameras embedded within Emo’s pupils. The cameras serve as Emo’s eyes, capturing subtle facial movements and expressions so they can be analyzed as they happen. This real-time observation allows Emo to react almost instantaneously, creating a sense of responsiveness and connection in its interactions with humans.

To achieve such a high level of facial mimicry, researchers took a distinctive approach in teaching Emo how to imitate human expressions. Through a process known as self-modeling, the robot made random facial movements in front of a camera, learning how specific actuators in its face corresponded to different facial expressions. This foundational step was crucial in enabling Emo to grasp the mechanics of mimicking human smiles and other facial gestures accurately. Subsequently, Emo underwent extensive video learning sessions in which it studied a variety of human facial expressions, honing its predictive capabilities further.
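The self-modeling step described above can be pictured with a small sketch. It is only an illustration under loud assumptions: the two-actuator face, the `observe_face` function, and all numeric values are hypothetical, and a simple nearest-match lookup is swapped in for whatever learning method the researchers actually used. The idea it shows is the same, though: try random commands, record what the camera sees, then reuse those records to find a command that produces a wanted expression.

```python
import random

# Hypothetical stand-in for the robot's face plus the camera watching it.
# Two actuator commands in [0, 1] produce two observed landmark values.
# The learner never reads these formulas; it only sees the outputs.
def observe_face(cmd):
    lip_corner, jaw = cmd
    mouth_width = 0.8 * lip_corner + 0.1 * jaw
    mouth_open = 0.9 * jaw - 0.2 * lip_corner
    return (mouth_width, mouth_open)

def babble(n_samples, seed=0):
    """Issue random commands and record what the camera sees for each one."""
    rng = random.Random(seed)
    samples = []
    for _ in range(n_samples):
        cmd = (rng.random(), rng.random())
        samples.append((cmd, observe_face(cmd)))
    return samples

def command_for(target, samples):
    """Return the recorded command whose observed landmarks are closest to target."""
    def sq_dist(sample):
        _, seen = sample
        return sum((s - t) ** 2 for s, t in zip(seen, target))
    return min(samples, key=sq_dist)[0]

samples = babble(2000)
smile_target = (0.7, 0.05)  # wide, nearly closed mouth (made-up values)
cmd = command_for(smile_target, samples)
achieved = observe_face(cmd)
error = max(abs(a - t) for a, t in zip(achieved, smile_target))
print("command:", cmd, "achieved:", achieved, "error:", error)
```

More random trials give the lookup more expressions to choose from, which is why this kind of "babbling" phase tends to come before any attempt at imitation.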

The significance of executing facial expressions in a timely manner cannot be overstated when it comes to fostering genuine interactions between humans and robots. The researchers behind Emo emphasize that delayed facial mimicry can come across as disingenuous, undermining the robot’s ability to establish a rapport with individuals. By synchronizing its facial responses with human emotions in real time, Emo strives to bridge the gap in the uncanny valley and enhance the authenticity of human-robot interactions. This emphasis on timely execution not only enhances the robot’s believability but also sets a new standard for future human-like robots aiming to engage seamlessly with people on an emotional level.
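To make the timing argument concrete, here is a rough sketch of why anticipation matters. It is not Emo's predictive model, which this article does not describe; plain linear extrapolation of a single made-up measurement (how far the mouth corners have lifted) is swapped in, and the function names, thresholds, and delays are all hypothetical.

```python
def predict_smile(corner_lift, dt, horizon, threshold):
    """Extrapolate the latest trend in mouth-corner lift `horizon` seconds ahead.

    corner_lift: recent measurements, oldest first (arbitrary units).
    dt: seconds between measurements.
    Returns True if the extrapolated value reaches `threshold`.
    """
    if len(corner_lift) < 2:
        return False
    velocity = (corner_lift[-1] - corner_lift[-2]) / dt
    projected = corner_lift[-1] + velocity * horizon
    return projected >= threshold

def can_smile_in_sync(lead_time, actuation_delay):
    """A robot can match the smile only if the warning comes earlier than
    the time its own face needs to move."""
    return lead_time >= actuation_delay

# Made-up trace sampled at 30 frames per second: the corners start to rise.
trace = [0.00, 0.00, 0.01, 0.03, 0.06]
onset_predicted = predict_smile(trace, dt=1 / 30, horizon=0.5, threshold=0.4)
flat_predicted = predict_smile([0.0, 0.0, 0.0], dt=1 / 30, horizon=0.5, threshold=0.4)

print(onset_predicted, flat_predicted)
print(can_smile_in_sync(lead_time=0.5, actuation_delay=0.3))
print(can_smile_in_sync(lead_time=0.2, actuation_delay=0.3))
```

A robot that only reacts after the smile is fully visible always pays its full actuation delay. One that is warned early enough can spend that delay before the smile arrives, which is the difference between mimicry that looks late and mimicry that looks shared.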

Unveiling the Magic Behind Emo’s Smile Anticipation Mechanism

Emo has made remarkable strides in human-robot interaction by successfully anticipating human facial expressions. By using the cameras within its pupils to predict a smile less than a second before it surfaces on a person’s face, Emo can mirror that expression in near real time. This achievement marks a significant advancement in the field of robotics, as it addresses a key challenge faced by humanoid robots: synchronizing their facial expressions with those of humans.

The potential impact of synchronized facial expressions on improving the perception of humanoid robots is profound. By mirroring human emotions more effectively and in a timely manner, robots like Emo can bridge the gap of the uncanny valley, making interactions with them feel more natural and relatable. This not only enhances the robots’ likability but also fosters a sense of comfort and trust in their interactions with humans. As technology continues to advance, the ability for robots to accurately predict and mirror human facial expressions could revolutionize human-robot relationships, paving the way for more seamless and engaging interactions in various settings.

Looking ahead, future advancements in robotic technology hold great promise for the evolution of human-robot relationships. As robots become more adept at interpreting and responding to human emotions through facial expressions, the potential applications in fields such as healthcare, customer service, and companionship are vast. The development of robots capable of empathetic and synchronized interactions has the power to transform the way we perceive and interact with AI-powered beings, opening up new possibilities for collaboration and integration into our daily lives.

With that said, the ability to accurately predict human facial expressions is not just a technical feat but a crucial advancement for enhancing robot capabilities. By mastering the art of synchronized facial expressions, robots like Emo are not only becoming more human-like in their interactions but are also laying the groundwork for a future where human-robot relationships are built on understanding, empathy, and seamless communication. This breakthrough in human-robot interaction sets the stage for a new era where AI-powered beings can truly connect with humans on a deeper, more emotional level, ushering in a paradigm shift in how we perceive and interact with robots.

Exploring the Promises and Perils of Human-Robot Interactions

Recapping the fascinating journey into Emo’s world of predictive smiles, it’s evident that this robotic creation is more than just a mechanical marvel. Through the meticulous study published in Science Robotics, researchers have unveiled Emo’s uncanny ability to anticipate human smiles with eerie precision, creating a synchrony that bridges the gap between robots and humans. By capturing the brief window of less than a second before a smile emerges, Emo’s quick response showcases a leap forward in the quest for more natural interactions with humanoid robots.

The evolution of human-robot interactions stands at a pivotal juncture, poised for a transformation spurred by Emo’s breakthrough in facial expression synchronization. As we watch Emo smile back in step with our own smiles, we are reminded of the delicate dance between humans and AI-powered machines. The uncanny valley that once separated us seems to be narrowing, offering a glimpse into a future where robots seamlessly mirror our expressions, paving the way for more authentic connections.

The implications of this study reverberate beyond Emo’s synthetic smile, hinting at a future where AI-powered robots could integrate seamlessly into society. By decoding and predicting human facial expressions, these robots could potentially enhance communication, empathy, and understanding in various fields, from healthcare to customer service. As we stand on the cusp of this technological revolution, the study’s findings illuminate a path where robots may one day not only observe and interpret our emotions but also reflect them back with startling accuracy – a prospect that challenges our perception of what it means to interact with intelligent machines in the not-so-distant future.