Innovative Approaches to Overcoming Data Scarcity in AI Development

In the fast-evolving field of artificial intelligence (AI), a new challenge is emerging: a looming scarcity of training data. As AI companies build ever-larger models, they face a pressing dilemma. The internet may soon not be large enough to supply the volume of data those models require.

Training data lies at the heart of AI model development. The quality and quantity of the data used in training directly affect a model’s performance and capabilities, which makes data a critical ingredient in the push for more capable AI.

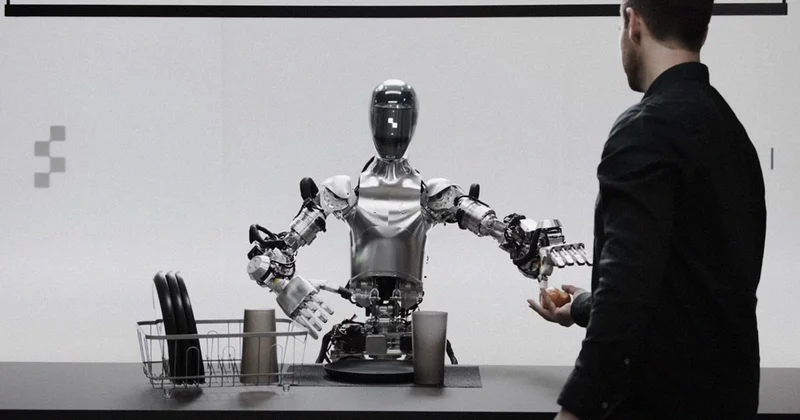

In response to the escalating data scarcity issue, AI companies are exploring alternative data sources and new training methods. Approaches under consideration include tapping publicly available video transcripts, using AI-generated “synthetic data,” and experimenting with new training methodologies. These strategies reflect the industry’s determination to work around data limitations, and they are pushing AI development into territory that is both creative and controversial.

Navigating the Boundaries of Data Training in AI Models

A significant challenge looms over AI development: a scarcity of data for training advanced models. Models have grown in size and complexity faster than the internet can supply suitable data, and this bottleneck threatens to slow the pace of AI innovation.

Data scarcity presents a multifaceted problem for AI developers. As the internet becomes insufficient to supply the volume of data that sophisticated models require, companies are forced to explore alternative sources. A shortage of training data can limit a model’s capabilities and potential applications. Without diverse and abundant data, models may lack accuracy, robustness, and adaptability, which hinders their effectiveness in real-world scenarios.

To address the data scarcity challenge, some companies are actively seeking alternative training methods, such as using publicly available video transcripts and experimenting with AI-generated “synthetic data.” Dataology, founded by Ari Morcos, is working on ways to train larger and smarter models with less data and fewer resources. Meanwhile, OpenAI is considering a more controversial avenue, training AI models on transcriptions of public YouTube videos, while Anthropic is developing higher-quality synthetic data to mitigate the impact of data shortages.

As the AI landscape grapples with the impending data scarcity crisis, these companies’ pursuit of novel data training methods underscores the industry’s resilience and adaptability in overcoming challenges to propel AI innovation forward.

Revolutionizing Data Training Strategies in AI Evolution

The quest for bigger and more advanced models has run into a pressing issue: the internet’s finite capacity to provide training data. Leading AI companies are exploring several ways around it. One alternative gaining traction is the use of publicly available video transcripts. By tapping into the vast repository of videos online, companies can extract rich textual data to train their models.

AI-generated synthetic data has also emerged as an approach to training artificial intelligence systems. Because it is produced by AI models themselves, synthetic data offers a seemingly limitless supply of training material, potentially circumventing the constraints of data scarcity. However, its adoption has sparked a heated debate within the research community. Critics caution that relying too heavily on AI-generated data could lead to a kind of “inbreeding” among AI models, resulting in a phenomenon known as “model collapse” or “Habsburg AI.” Despite these concerns, companies like Anthropic are developing higher-quality synthetic data to mitigate such risks and preserve the integrity of their models.
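To make the “model collapse” worry concrete, here is a minimal toy sketch in Python. It is not how any company mentioned here trains its models, and the function name, sample size, and generation count are all assumptions chosen for illustration. A simple Gaussian “model” is repeatedly refit to a small batch of samples drawn from its own previous version, with no fresh real data added, and the spread of what it can generate tends to shrink from one generation to the next.

```python
import random
import statistics


def simulate_recursive_training(generations=200, sample_size=10, seed=0):
    """Repeatedly fit a Gaussian to samples drawn from the previous fit.

    Hypothetical toy model: returns the fitted standard deviation at
    each generation, starting with the "real" distribution (std = 1.0).
    """
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0  # generation 0: stands in for real-world data
    history = [sigma]
    for _ in range(generations):
        # "Synthetic data": samples come only from the current model.
        samples = [rng.gauss(mu, sigma) for _ in range(sample_size)]
        # "Retraining": refit the model to its own output.
        mu = statistics.fmean(samples)
        sigma = statistics.pstdev(samples)
        history.append(sigma)
    return history


if __name__ == "__main__":
    history = simulate_recursive_training()
    for gen in (0, 50, 100, 200):
        print(f"generation {gen:3d}: std = {history[gen]:.3e}")
```

In this toy setting the fitted spread drifts toward zero because each small batch slightly underestimates the variability of the model before it, and the errors compound. Real language models are far more complex, so this only illustrates the intuition behind the critics’ concern and does not predict how any production system behaves.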

Meanwhile, companies such as Dataology are embarking on a different path by exploring ways to train larger and smarter models with less data and resources. By focusing on optimizing the efficiency of data utilization, these companies are striving to push the boundaries of AI capabilities while conserving valuable resources. However, the landscape of data training is not without controversy, as behemoths like OpenAI delve into uncharted territory by considering unconventional methods for training AI models. Among these approaches is the potential training of GPT-5 on transcriptions sourced from public YouTube videos, a move that underscores the industry’s willingness to push the boundaries of data acquisition.

As debates surrounding the use of synthetic data in AI training intensify, the industry stands at a pivotal juncture where innovation and ethics intersect. The quest for sustainable data training solutions underscores the complex interplay between technological advancement and ethical considerations, shaping the future trajectory of artificial intelligence development.

Pioneering Solutions to the Data Scarcity Predicament in AI

In the ever-evolving landscape of artificial intelligence, companies are constantly pushing the boundaries of innovation to address the challenges posed by a potential shortage of training data. One notable initiative comes from Dataology, founded by esteemed ex-Meta and Google DeepMind researcher Ari Morcos. Dataology stands out for its pioneering efforts to explore new avenues for training larger and smarter AI models with reduced data and resources. Morcos, drawing upon his wealth of experience in the field, aims to revolutionize the way AI systems are trained, offering a promising solution to the looming data scarcity dilemma.

Meanwhile, OpenAI is at the forefront of discussions surrounding the utilization of public data sources for training models. With the internet reaching its limits in providing the vast amount of data necessary for advanced AI models, the company is considering unconventional approaches. The prospect of training GPT-5 on transcriptions from public YouTube videos reflects OpenAI’s commitment to exploring alternative data sources to fuel the next generation of AI technologies. However, these ventures raise ethical concerns and transparency issues, as questions linger about the origins and implications of such data training methods.

On a parallel track, Anthropic, a company established in 2021 with a focus on developing safer and more ethical AI models than its predecessors, is actively engaged in creating purportedly higher-quality synthetic data. By investing resources into crafting synthetic data sets of superior quality, Anthropic positions itself as a key player in navigating the ethical complexities of AI development. While the specifics of their synthetic data creation remain guarded, the company’s dedication to fostering a more transparent and conscientious approach to data training underscores a pivotal shift in the AI industry towards ethical considerations and accountability.

Charting a Course Towards Data-Driven AI Advancements

Researchers’ views on the possibility of AI running out of usable training data vary, with some expressing concern while others remain optimistic about potential solutions. Pablo Villalobos, a prominent figure in the field, acknowledges the challenges ahead but is quick to point out that panic is not warranted just yet. Despite estimates suggesting that AI could exhaust its training data within a few years, Villalobos believes that new developments could change the picture. “The biggest uncertainty,” Villalobos said in a recent interview, “is what breakthroughs you’ll see.” This sentiment reflects a cautious optimism about the future of AI training data.

As uncertainty grows over the availability of sufficient training data, researchers and industry players are exploring novel solutions. The debate around synthetic data as an alternative has gained traction, with companies like Anthropic and OpenAI venturing into uncharted territory. Training AI models on AI-generated data has raised concerns about “model collapse,” also dubbed “Habsburg AI,” but proponents argue that higher-quality synthetic data may offer a viable path forward. The secrecy surrounding these efforts underscores how complex and sensitive novel training methods are, and it leaves the outcomes hard to judge from the outside.

Additionally, the environmental impact of AI development is coming under increased scrutiny, with critics pointing to the significant energy consumption and resource-intensive nature of training larger models. The call for a more sustainable approach to AI innovation is gaining traction, as concerns about the environmental footprint of AI technologies grow louder. As the industry grapples with the dual challenges of data scarcity and environmental sustainability, the need for responsible and forward-thinking practices becomes increasingly apparent. Balancing progress with ethical and environmental considerations will be essential in shaping the future trajectory of AI development.

Balancing Innovation and Ethics in the Quest for AI Data Solutions

The data scarcity challenge looms large as companies strive to build more sophisticated models whose needs outpace the current supply of training data. The rapid growth of AI technologies has brought the need for alternative training methods to the forefront. As the internet’s capacity to provide the necessary data diminishes, solutions such as publicly available video transcripts and synthetic data generation are gaining traction. Companies like Dataology and Anthropic are exploring ways to train larger and smarter models with minimal data resources, emphasizing the importance of adapting to changing circumstances in the AI landscape.

Moreover, the importance of ethics and transparency in AI model development cannot be overstated. The debate around synthetic data and its potential implications highlights the need to maintain ethical standards and ensure accountability in the AI industry. OpenAI’s discussions about training models on YouTube data underscore the need for clear guidelines and ethical frameworks to govern data usage in AI development. Transparency about data sources and training processes is crucial to building trust and safeguarding against potential misuse of AI systems.

Moving forward, a balanced approach to AI model development must take into account both data limitations and environmental impact. While the quest for larger and more powerful models drives innovation, it is equally essential to consider the environmental consequences of resource-intensive AI training processes. Embracing sustainable practices, such as optimizing energy consumption and reducing reliance on rare-earth minerals, can help mitigate the environmental footprint of AI development. By prioritizing ethical considerations, transparency, and sustainability, the AI industry can navigate the challenges posed by data scarcity while fostering responsible technological advancement for the future.