**Exploring the Water Consumption Landscape in China’s AI Data Centers**

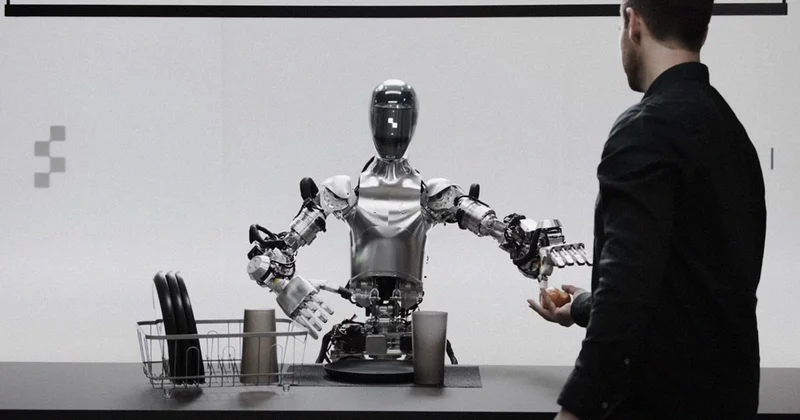

China’s pursuit of AI leadership is driving a rapid expansion of data centers, with significant consequences for water consumption. As the country positions itself to lead in artificial intelligence, it is building and operating data centers at a massive scale, since these facilities are essential for training and running AI models. The cost of this advancement is a sharply rising demand for water.

The scale of this water consumption is staggering. China’s data centers are on track to use an estimated 343 billion gallons of water a year, equivalent to the residential water usage of 26 million people. Projections indicate that by 2030 this figure could rise to 792 billion gallons, more than double the current estimate and enough to satisfy the needs of the entire population of South Korea. This demand reflects how intensive AI model training is and how heavily data centers rely on water to cool their hardware.
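To make these comparisons concrete, the short sketch below works through the arithmetic behind them. It assumes the quoted totals are annual figures in US gallons; the constant and function names are illustrative and do not come from any cited report.

```python
# Figures quoted in the article, in US gallons.
# Assumption: both totals are annual consumption figures.
CURRENT_GALLONS = 343e9
PROJECTED_2030_GALLONS = 792e9
PEOPLE_EQUIVALENT = 26e6

LITERS_PER_US_GALLON = 3.785411784  # exact, by definition of the US gallon
DAYS_PER_YEAR = 365


def per_capita_liters_per_day(total_gallons: float, people: float) -> float:
    """Residential use per person per day (liters) implied by the comparison."""
    gallons_per_person_per_year = total_gallons / people
    return gallons_per_person_per_year * LITERS_PER_US_GALLON / DAYS_PER_YEAR


def growth_factor(current: float, projected: float) -> float:
    """How many times larger the projected total is than the current one."""
    return projected / current


implied_use = per_capita_liters_per_day(CURRENT_GALLONS, PEOPLE_EQUIVALENT)
growth = growth_factor(CURRENT_GALLONS, PROJECTED_2030_GALLONS)

print(f"Implied residential use: {implied_use:.0f} L per person per day")
print(f"Projected growth by 2030: {growth:.2f}x")
```

Under these assumptions, the 26-million-person comparison implies roughly 137 liters of residential use per person per day, and the 2030 projection is about 2.3 times the current estimate.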

This rapid expansion of data centers, coupled with the insatiable thirst for water, paints a concerning picture of the environmental impact of AI development in China. The convergence of cutting-edge technology and resource-intensive infrastructure raises alarms about sustainability and the strain on precious water resources. As China marches towards a future shaped by AI, mitigating the environmental footprint of this digital revolution becomes an urgent imperative to navigate the delicate balance between technological advancement and ecological preservation.

**Navigating the Nexus of Energy and Water in AI Model Training**

Training AI models requires enormous amounts of energy, and removing the resulting heat consumes substantial amounts of water for cooling data center hardware. As AI technologies advance, training and maintaining these models makes it harder to manage resources sustainably. With China set to triple its number of data centers by 2030, the demand for cooling water is expected to climb to around 792 billion gallons, an amount equivalent to the entire water needs of a nation like South Korea.

The close link between the energy demands of AI models and the use of water for cooling is a pressing issue. To keep their data centers from overheating, companies rely heavily on water as a coolant, adding to the strain on water resources. The amount of water projected to be consumed in Chinese data centers underscores the urgent need for more sustainable ways to manage the energy and cooling requirements of AI infrastructure.

The rapid growth in data centers, particularly in China, paints a concerning picture of the environmental impact of AI development. The projected tripling of data centers by 2030 highlights the escalating demand for the resources, especially water, that power the AI revolution. As the world grapples with the implications of such intensive energy and water usage, it becomes clear that proactive measures are essential to address the growing environmental footprint of AI technologies.

**Unveiling the Global Water Footprint of Tech Giants**

China is not the only player grappling with the water demands of artificial intelligence (AI). In the race to harness AI capabilities, tech giants like OpenAI, Microsoft, and Google have been inadvertently fueling a surge in water consumption. Last year, OpenAI, in partnership with Microsoft, used 185,000 gallons of water solely in training the GPT-3 model, a volume comparable to that needed to cool a nuclear reactor. Similarly, Google’s 2023 Environmental Report disclosed that the company consumed 5.6 billion gallons of water in 2022. These figures underscore the immense water requirements of developing and maintaining AI technologies.

The energy-intensive nature of AI chatbots compounds the problem. When 100 million users engage with OpenAI’s ChatGPT, the water consumed is on the scale of 20 Olympic swimming pools. Conducting the same interactions through basic Google searches would require only the water of a single swimming pool. This twentyfold gap shows how much more resource-intensive AI-powered tools are than traditional online behavior. As chatbots become ubiquitous in daily interactions, their collective impact on water resources could be profound, especially in regions already facing water scarcity.
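The swimming-pool comparison can be turned into a rough per-user figure. The sketch below assumes a nominal Olympic pool of 50 m by 25 m by 2 m (2,500 cubic meters); the depth is an assumption, since real pools vary, and the names used here are illustrative only.

```python
# Assumption: nominal Olympic pool of 50 m x 25 m x 2 m; 1 cubic meter = 1000 liters.
OLYMPIC_POOL_LITERS = 50 * 25 * 2 * 1000
USERS = 100_000_000  # user count quoted in the article


def liters_per_user(pools: float, users: int = USERS) -> float:
    """Spread the water in `pools` Olympic pools evenly across `users` users."""
    return pools * OLYMPIC_POOL_LITERS / users


chatgpt_liters = liters_per_user(20)  # 20 pools for chatbot interactions
search_liters = liters_per_user(1)    # 1 pool for the same interactions via search

print(f"Chatbot: {chatgpt_liters * 1000:.0f} mL per user")
print(f"Search:  {search_liters * 1000:.0f} mL per user")
print(f"Ratio:   {chatgpt_liters / search_liters:.0f}x")
```

With that pool size, the comparison works out to about half a liter of water per chatbot user against about 25 milliliters per search user. A deeper pool would raise both numbers but leave the twentyfold ratio unchanged.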

The narrative of escalating water usage by tech giants reflects a broader concern regarding the environmental footprint of AI infrastructure. As the global community grapples with the implications of AI’s voracious energy appetite, there is a growing imperative to pivot towards more sustainable practices. Executives like Arm Holdings Plc CEO Rene Haas are sounding the alarm, warning that data centers’ electricity consumption could surpass that of entire nations. In the face of this looming crisis, innovative solutions, such as developing energy-efficient chips for AI models, are heralded as crucial steps towards mitigating the industry’s environmental impact. The quest for sustainability in AI development is not only a technological challenge but a moral imperative to safeguard our planet’s precious resources for future generations.

**Unraveling the Environmental Ripples of AI Data Centers**

Amid the rapid advancement of artificial intelligence, the environmental impact of China’s ambitious AI infrastructure expansion is drawing significant attention. In particular, the water consumed by a growing number of data centers is raising concerns over water scarcity. If China triples its data center numbers by 2030, these facilities could use 792 billion gallons of water, enough to satisfy the needs of an entire country like South Korea. Cooling the hardware that sustains AI models at this scale puts water resources under strain.

Microsoft’s data center in the Arizona desert serves as a stark example of the implications of AI’s thirst for water. Recent reports from The Atlantic shed light on Microsoft’s efforts to downplay the considerable water usage of its data center in a region already grappling with water scarcity issues. This revelation underscores the challenges posed by the exponential growth of data centers for AI development, not just in China but globally. The unchecked escalation of water consumption in data centers could exacerbate the environmental strain on regions already facing limited water resources.

Looking ahead, the challenges of energy and water usage in AI development are poised to escalate further. Predictions indicate that data centers could consume more electricity than India by the end of the decade, a troubling prospect for global energy sustainability. Arm Holdings Plc CEO Rene Haas has sounded the alarm on the escalating energy demands of AI, emphasizing the urgent need for more energy-efficient ways to power the growth of AI models. As AI’s energy consumption approaches that of even the largest nations, breakthroughs in efficiency become increasingly pressing.

**Forecasting the Energy Tsunami in AI Development**

In the pursuit of artificial intelligence (AI) advancement, the focus on energy-efficient solutions has never been more important. At the heart of the issue are energy-efficient chips. These components are central to developing and operating AI systems, and they are a cornerstone of sustainability in the tech landscape. As AI technologies grow, the demand for computational power escalates, intensifying the strain on energy resources and exacerbating environmental concerns.

Against this backdrop, the call for breakthroughs in energy efficiency has become more urgent. The rapid growth of data centers and their rising consumption of water and electricity underscore the need for major advances in AI infrastructure. Rene Haas, the CEO of Arm Holdings Plc, has stressed the critical need for solutions that push energy efficiency further. With AI’s demand for power showing no signs of abating, innovation in energy efficiency holds the key to mitigating the environmental impact of AI proliferation.

Haas’s plea for advances in how AI models are trained and powered reflects a vision of a sustainable AI ecosystem. As AI continues to grow, the onus rests on industry leaders and innovators to forge a path towards a greener future. Energy-efficient solutions are a linchpin of that effort. In Haas’s view, the pursuit of efficiency in AI models and infrastructure is not merely a choice but an imperative for safeguarding our planet’s resources and fostering responsible technological progress.

**Pioneering Sustainable Solutions for AI’s Energy and Water Dilemma**

As the demand for artificial intelligence (AI) continues to surge, so does the critical need to address the escalating energy and water consumption associated with AI data centers. To combat the immense environmental impact, it is imperative to prioritize strategies aimed at optimizing energy consumption. By implementing energy-efficient practices and technologies, such as utilizing renewable energy sources and improving cooling systems, data centers can significantly reduce their carbon footprint and water usage.

Furthermore, research and development efforts play a pivotal role in fostering sustainable AI infrastructure. Investing in innovative solutions, such as developing more energy-efficient AI algorithms and hardware, can pave the way for greener practices within the tech industry. By designing AI systems that prioritize energy efficiency without compromising performance, companies can mitigate the ecological repercussions of their operations.

Collaboration among stakeholders is another key component in promoting environmentally friendly AI technologies. By fostering partnerships between tech companies, policymakers, and environmental organizations, a concerted effort can be made to establish standards and regulations that encourage sustainable practices in AI development. Sharing best practices, knowledge, and resources can lead to the creation of industry-wide initiatives that prioritize environmental conservation while advancing the capabilities of AI technology.

Overall, addressing the rising energy and water usage in AI development demands a multi-faceted approach: optimizing energy consumption, innovating in sustainable infrastructure, and collaborating on environmentally friendly technologies. By adopting these strategies, the tech industry can navigate the challenges posed by the rapid growth of AI while safeguarding our planet’s precious resources for future generations.