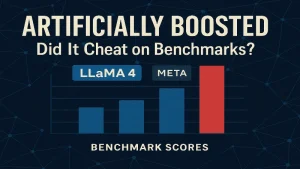

Meta’s LLaMA 4 AI Model Under Fire for Benchmark Performance—Did It Cheat, or Just Outperform?

Meta’s LLaMA 4 is making waves in the artificial intelligence world, but not only for its performance. Accusations have surfaced that the company’s newest large language model may have been trained on data from benchmarks such as MMLU or HellaSwag, which would inflate its results and call into question the legitimacy of its impressive scores. Meta denies any manipulation, but the controversy has reopened a broader debate about LLM benchmarking, transparency, and AI model evaluation standards.