Exploring the Synthetic Solution: Addressing Training Data Scarcity in AI

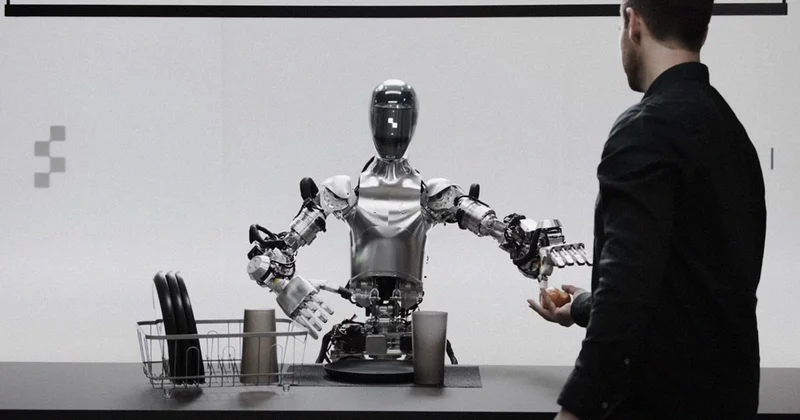

The landscape of artificial intelligence (AI) is undergoing a transformative shift as companies confront a looming shortage of training data crucial for developing robust AI models. With the exponential growth in AI applications across various industries, the demand for high-quality labeled data has outpaced its availability, prompting a quest for innovative solutions. One such solution gaining traction is the concept of synthetic data, a simulated dataset created artificially rather than sourced from real-world observations.

Synthetic data holds the promise of addressing the scarcity of training data by generating a potentially unlimited supply of labeled examples for AI algorithms to learn from. This approach avoids the limits of relying on finite and often expensive real-world datasets. Proponents argue that if AI systems can be trained effectively on data generated by AI itself, it would not only alleviate the training data shortage but also mitigate concerns about copyright infringement, a pressing issue in the AI domain.

However, despite its potential benefits, the efficacy of synthetic data in training AI models remains a contentious subject within the scientific community. Companies like Anthropic, Google, and OpenAI are actively exploring the development of high-quality synthetic datasets, but challenges persist. Researchers have encountered what they refer to as “Habsburg AI” or “Model Autophagy Disorder,” terms describing AI systems that become overly reliant on AI-generated data, leading to erratic behavior and degraded performance. Creating synthetic data that consistently yields reliable, generalizable AI models remains a significant obstacle that researchers are still working to overcome.

Unlocking the Power of Synthetic Data: A Paradigm Shift in AI Training

Synthetic data, in the realm of artificial intelligence (AI) development, presents itself as an intriguing solution to the looming scarcity and quality issues surrounding traditional training data. Unlike conventional datasets derived from real-world observations, synthetic data is generated artificially, typically by algorithms or by AI models themselves. This novel approach aims to address the finite availability of the diverse, high-quality training data required to train advanced AI models effectively. The concept holds the promise of not only alleviating the training data shortage but also potentially circumventing concerns related to copyright infringement in AI systems.

The utilization of synthetic data in AI development offers several notable benefits. One key advantage lies in the generation of vast amounts of diverse and labeled data, which can be instrumental in training complex AI models. By leveraging synthetic data, AI companies can potentially accelerate the model development process, enhance model performance, and explore innovative applications that demand extensive datasets. Moreover, synthetic data presents an opportunity to create customized datasets tailored to specific AI tasks or domains, allowing for greater flexibility and control in model training and evaluation.
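To make the point about labeled, tailored datasets concrete, here is a minimal Python sketch, assuming a toy sentiment-classification task. The templates, the item list, and the `generate_labeled` helper are hypothetical and invented for illustration; real synthetic-data pipelines typically use generative models rather than fixed templates. What the sketch shows is that each label is known by construction, and the class mix is a parameter the developer controls.

```python
import random
from collections import Counter

# Hypothetical templates for a toy sentiment-classification task.
TEMPLATES = {
    "positive": ["The {item} works great.", "I love this {item}."],
    "negative": ["The {item} broke after a day.", "I regret buying this {item}."],
}
ITEMS = ["phone", "laptop", "kettle", "bicycle"]


def generate_labeled(n, class_weights, seed=0):
    """Return n (text, label) pairs whose label mix follows class_weights.

    The label is chosen first and the text is built from that label's
    templates, so every example is correctly labeled by construction.
    """
    rng = random.Random(seed)
    labels = list(class_weights)
    weights = [class_weights[label] for label in labels]
    examples = []
    for _ in range(n):
        label = rng.choices(labels, weights=weights, k=1)[0]
        template = rng.choice(TEMPLATES[label])
        examples.append((template.format(item=rng.choice(ITEMS)), label))
    return examples


# Deliberately skew the dataset toward negative examples.
data = generate_labeled(1000, {"positive": 0.3, "negative": 0.7})
print(Counter(label for _, label in data))
```

Changing the `class_weights` argument is all it takes to oversample a rare class, which is the kind of control over dataset composition the paragraph above describes.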

When comparing synthetic data to real-world data for AI training purposes, several distinctions become apparent. While traditional training data is derived from authentic observations and experiences, synthetic data is artificially created, often based on algorithms or simulations. Real-world data reflects the complexity and variability of natural environments, capturing nuances and uncertainties inherent in the physical world. In contrast, synthetic data can be designed to exhibit specific characteristics, scenarios, or anomalies that may not be readily available in real-world datasets. However, the challenge lies in ensuring that synthetic data accurately represents the diversity and complexity of the real world, avoiding the pitfalls of overfitting or bias that can hinder AI model performance.
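Checking whether synthetic data accurately represents real data requires some measurable notion of similarity. One common choice for a single numeric feature is the two-sample Kolmogorov-Smirnov statistic: the largest gap between the two samples' empirical cumulative distribution functions. The article does not name any particular metric, so this is an assumed example rather than a description of what AI companies use. The `ks_statistic` function and the Gaussian samples below are purely illustrative.

```python
import random


def ks_statistic(a, b):
    """Two-sample Kolmogorov-Smirnov statistic: the largest gap between the
    empirical CDFs of samples a and b (0 = identical, 1 = fully separated)."""
    a, b = sorted(a), sorted(b)
    i = j = 0
    d = 0.0
    while i < len(a) and j < len(b):
        x = min(a[i], b[j])
        # Step past every copy of x in both samples so ties are handled,
        # then i/len(a) and j/len(b) are the two empirical CDFs at x.
        while i < len(a) and a[i] == x:
            i += 1
        while j < len(b) and b[j] == x:
            j += 1
        d = max(d, abs(i / len(a) - j / len(b)))
    return d


rng = random.Random(42)
real = [rng.gauss(0.0, 1.0) for _ in range(2000)]
good_synth = [rng.gauss(0.0, 1.0) for _ in range(2000)]
narrow_synth = [rng.gauss(0.0, 0.3) for _ in range(2000)]  # lacks the real spread

print(ks_statistic(real, good_synth))
print(ks_statistic(real, narrow_synth))
```

Because the narrow synthetic sample lacks the spread of the real data, its statistic should come out clearly larger than that of the well-matched sample. A single-feature test like this cannot detect problems in the joint structure across features, so in practice it would be only one check among several.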

As AI companies navigate the complexities of synthetic data generation, creating high-quality and reliable synthetic datasets remains a pressing concern. While synthetic data has immense potential to reshape AI development, addressing its inherent limitations and ensuring the fidelity and generalizability of synthetic datasets are crucial steps toward realizing the full benefits of this approach.

Navigating the Landscape: Synthetic Data vs. Real-World Data for AI

Efforts by AI companies such as Anthropic, Google, and OpenAI to pioneer the development of synthetic data have been met with both curiosity and skepticism. At a time when quality training data is becoming increasingly scarce, creating synthetic data presents itself as a potential solution. These companies are actively exploring how synthetic data could alleviate the growing concerns surrounding AI training data shortages and potential copyright infringement.

However, despite these ambitious attempts, AI models trained on synthetic data face significant challenges. The emergence of terms like Habsburg AI and Model Autophagy Disorder sheds light on the problems such practices can cause. The comparison to the Habsburg dynasty’s inbreeding and the genetic anomalies that followed serves as a cautionary tale for the AI community: AI models that rely so heavily on the outputs of other generative AI systems that they develop exaggerated, grotesque features are a worrying prospect, reminiscent of the Habsburg jaw.

Richard G. Baraniuk’s concept of Model Autophagy Disorder, in which AI models essentially “blow up” after a few generations of inbreeding, underscores the fragility of synthetic data development. As researchers and companies delve deeper into synthetic data, the pressing question remains: can they create synthetic data that doesn’t lead their systems astray? The approach taken by companies like OpenAI and Anthropic, a system of checks and balances in which one model generates data and another verifies its accuracy, hints at a potential solution. Nevertheless, the path to successfully harnessing synthetic data is fraught with uncertainty, and only time will tell whether these companies’ efforts will bear fruit.
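The inbreeding failure can be reproduced in miniature. The Python sketch below is an assumed toy setup, not Baraniuk’s actual experiment: the “model” is just a Gaussian fitted by its mean and standard deviation, and each generation is trained only on samples drawn from the previous generation’s fit. Because every fit is made from a small finite sample, estimation errors compound from one generation to the next, and the fitted standard deviation tends to shrink toward zero. This is a simple analogue of a model gradually losing the diversity of the original data. The function name `recursive_training` and all parameters are hypothetical.

```python
import random
import statistics


def recursive_training(generations, n_samples, seed=0):
    """Toy model of training each generation only on the previous
    generation's output. The 'model' is a Gaussian fitted by mean and
    standard deviation. Returns the fitted stdev at every generation."""
    rng = random.Random(seed)
    # Generation 0 is fitted to "real" data drawn from N(0, 1).
    data = [rng.gauss(0.0, 1.0) for _ in range(n_samples)]
    stdevs = []
    for _ in range(generations):
        mu = statistics.fmean(data)
        sigma = statistics.pstdev(data)  # maximum-likelihood fit
        stdevs.append(sigma)
        # The next generation sees only synthetic samples from the fit.
        data = [rng.gauss(mu, sigma) for _ in range(n_samples)]
    return stdevs


history = recursive_training(generations=200, n_samples=20)
print(f"first fit stdev: {history[0]:.3f}, last fit stdev: {history[-1]:.6f}")
```

Mixing a fixed share of fresh real data back into every generation is one commonly discussed way to slow this drift. The sketch leaves that out to isolate the effect.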

Unveiling the Innovations: AI Companies’ Quest for Synthetic Data

In the quest to improve the quality of synthetic data, AI companies such as OpenAI and Anthropic are pioneering innovative strategies, one of which involves the implementation of a checks-and-balances system. This system, as detailed by the New York Times, aims to address the challenges of ensuring accuracy and reliability in the data generated. By incorporating a dual-model approach, these companies are taking a proactive stance towards enhancing the integrity of synthetic data.

In this setup, the first model is responsible for generating the synthetic data, leveraging advanced algorithms to create a diverse array of information. This initial stage is crucial in laying the groundwork for the dataset that will be utilized to train AI models. Subsequently, the second model comes into play, acting as a verifier that scrutinizes the generated data for consistency, correctness, and adherence to predefined guidelines. This verification step serves as a quality control measure, essential for detecting any anomalies or inaccuracies in the synthetic dataset.
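The generate-then-verify loop can be sketched in a few lines of Python. Everything here is hypothetical: `generator_model` and `verifier_model` are simple stand-ins for the two models, the task is toy arithmetic, and the 20% error rate is invented. The verifier sees only the question text and the proposed answer, recomputes the result independently, and rejects anything it cannot confirm. Real verifiers are themselves models and can make mistakes. Arithmetic is used here only because it makes verification exact and the sketch easy to check.

```python
import random
import re


def generator_model(rng):
    """Hypothetical stand-in for a data-generating model: emits an arithmetic
    question whose proposed answer is wrong about 20% of the time."""
    a, b = rng.randint(1, 99), rng.randint(1, 99)
    answer = a + b
    if rng.random() < 0.2:
        answer += rng.choice([-10, -1, 1, 10])  # simulated generation error
    return {"question": f"What is {a} + {b}?", "answer": answer}


QUESTION = re.compile(r"What is (\d+) \+ (\d+)\?")


def verifier_model(sample):
    """Hypothetical stand-in for a verifying model: parses the question text,
    recomputes the answer, and accepts the sample only if the two agree."""
    match = QUESTION.fullmatch(sample["question"])
    if match is None:
        return False  # reject anything that is not a well-formed question
    a, b = map(int, match.groups())
    return a + b == sample["answer"]


def build_dataset(n_candidates, seed=0):
    """Generate candidates with the first model; keep what the second accepts."""
    rng = random.Random(seed)
    candidates = [generator_model(rng) for _ in range(n_candidates)]
    accepted = [s for s in candidates if verifier_model(s)]
    return candidates, accepted


candidates, accepted = build_dataset(1000)
print(f"kept {len(accepted)} of {len(candidates)} candidates")
```

One design choice worth noticing is that rejected samples are simply dropped rather than corrected, trading some generation cost for a cleaner dataset.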

Anthropic, a company founded by former OpenAI employees with a commitment to ethical AI development, has been at the forefront of this initiative. They have adopted a structured approach, known as a “Constitution,” which outlines a set of stringent guidelines for training their two-model system. By adhering to these principles, Anthropic aims to instill a sense of accountability and rigor in the generation and verification of synthetic data. Notably, Anthropic has openly disclosed their use of synthetic data and the meticulous processes involved in training their AI models, exemplifying a transparent and responsible approach in the realm of AI research.
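Anthropic’s description of its “Constitutional AI” method involves a model critiquing its own outputs against written principles and then revising them. The Python sketch below imitates only the shape of that loop under heavy simplifying assumptions. The two principles are invented examples, and the critique and revision steps are replaced by regular expressions so the example stays self-contained; in the real method, a language model performs both steps. `PRINCIPLES` and `critique_and_revise` are hypothetical names.

```python
import re

# Hypothetical, hand-written principles. Each pairs a critique (does the text
# violate the principle?) with a revision that rewrites the offending text.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+")
INSULTS = re.compile(r"\b(idiot|stupid)\b", re.IGNORECASE)

PRINCIPLES = [
    ("Do not reveal personal contact details.",
     lambda text: EMAIL.search(text) is not None,
     lambda text: EMAIL.sub("[redacted email]", text)),
    ("Do not insult the user.",
     lambda text: INSULTS.search(text) is not None,
     lambda text: INSULTS.sub("[removed]", text)),
]


def critique_and_revise(text):
    """Check a generated sample against each principle in turn, revising it
    whenever the critique finds a violation. Returns the revised text and the
    list of principles that triggered a revision."""
    triggered = []
    for principle, violates, revise in PRINCIPLES:
        if violates(text):
            text = revise(text)
            triggered.append(principle)
    return text, triggered


draft = "Only an idiot would ask that. Email jane.doe@example.com instead."
revised, triggered = critique_and_revise(draft)
print(revised)
print(triggered)
```

Revising flagged samples, rather than discarding them as a pure filter would, keeps more of the generated data usable. Whether that is appropriate depends on how reliable the revision step is.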

By embracing this checks-and-balances system and refining the roles of the first and second models in generating and verifying synthetic data, companies like OpenAI and Anthropic are paving the way for advancements in AI technology. Through strategic methodologies and a commitment to quality assurance, the potential for synthetic data to revolutionize AI training while mitigating risks of AI copyright infringement is gradually becoming a tangible reality.

Quality Control in the Age of Synthetics: The OpenAI-Anthropic Approach

In evaluating the potential for synthetic data to overcome current challenges in AI development, it becomes apparent that while the concept holds promise, significant hurdles must be cleared before widespread adoption can be considered viable. The allure of synthetic data lies in its ability to address the growing scarcity of training data while potentially sidestepping issues such as copyright infringement. However, as exemplified by the struggles of leading companies like Anthropic, Google, and OpenAI in creating quality synthetic data, it is evident that much work remains to be done.

At the crux of the matter lie the uncertainties surrounding our understanding of AI systems and what they imply for developing synthetic data successfully. Likening AI systems poorly trained on synthetic data to “Habsburg AI” or “Model Autophagy Disorder” paints a vivid picture of the risks involved. These terms underscore the danger of creating AI models so heavily reliant on generated data that they lose touch with reality, akin to inbred mutants in need of correction.

Despite the challenges and uncertainties, researchers at OpenAI and Anthropic are pioneering a checks-and-balances approach to synthetic data development to counteract potential pitfalls. By having one model generate the data while another verifies its accuracy, they introduce a measure of quality control. Anthropic’s openness about the internal guidelines for its two-model system, including the training of its latest LLM on internally generated data, offers a glimpse into the direction synthetic data research is taking.

Overall, the successful implementation of synthetic data in AI technologies hinges on overcoming the current limitations and uncertainties that plague the field. While the prospects are promising, the timeline for achieving a functional and dependable synthetic data solution remains uncertain. As researchers grapple with the challenges posed by the intricate nature of AI systems and the potential consequences of misguided synthetic data, the road to realizing the full potential of synthetic data in AI technologies is fraught with complexity and uncertainty.

Bridging the Gap: Future Horizons and Challenges of Synthetic Data Integration in AI Technologies

In a world where artificial intelligence (AI) is advancing at a breakneck pace, the scarcity of quality training data looms as a formidable challenge. The reliance on copious amounts of data to teach AI systems has created a bottleneck in the industry, prompting a desperate search for solutions. Enter synthetic data: a tantalizing prospect that promises to ease the strain of sourcing authentic datasets. However, as the quest for synthetic data unfolds, the road ahead remains murky and full of obstacles.

The allure of synthetic data lies in its potential to not only address the shortage of training data but also circumvent issues like copyright infringement in AI. Companies like Anthropic, Google, and OpenAI are at the forefront of this exploration, endeavoring to crack the code on creating synthetic data that can effectively train AI models without encountering the pitfalls of ‘Habsburg AI’ or ‘Model Autophagy Disorder.’ Despite their efforts, the journey towards viable synthetic data remains fraught with challenges, with AI systems often stumbling when built on such fabricated information.

As the debate about the feasibility of synthetic data rages on, tackling training data scarcity in AI is more pressing than ever. The need for innovative solutions to sustain the growth and development of AI cannot be overstated. While the current landscape may be riddled with uncertainties and setbacks, the imperative for continued exploration and innovation in AI training methodologies is crystal clear. The call to action is resounding: the time is now to push the boundaries of what is possible and forge a path toward a future where AI can thrive on data that is as diverse and robust as the challenges it seeks to tackle.